How origins and pools become unhealthy

Dynamic load balancing means that your load balancer only directs requests to servers that can handle the traffic.

But how does your load balancer know which servers can handle the traffic? We determine that through a system of monitors, health monitors, and origin pools.

Components

Dynamic load balancing happens through a combination of origin pools [1], monitors [2], and health monitors [3].

How an origin becomes unhealthy

Each health monitor request is trying to answer two questions:

- Is the server online?: Does the server respond to the health monitor request at all? If so, does it respond quickly enough (as specified in the monitor’s Timeout field)?

- Is the server working as expected?: Does the server respond with the expected HTTP response codes? Does it include specific information in the response body?

If the answer to either of these questions is “No”, then the server fails the health monitor request.
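The two questions above can be sketched as a single pass/fail check. This is a minimal illustration, not Cloudflare's implementation: the `MonitorSettings` and `ProbeResult` types and their field names are hypothetical stand-ins for the monitor's Timeout, expected response codes, and expected response body settings.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class MonitorSettings:
    # Hypothetical names standing in for the monitor's configured values.
    timeout_seconds: float = 5.0
    expected_codes: Set[int] = field(default_factory=lambda: {200})
    expected_body: Optional[str] = None  # substring the body must contain


@dataclass
class ProbeResult:
    # Outcome of one health monitor request (illustrative structure).
    responded: bool
    elapsed_seconds: float = 0.0
    status_code: Optional[int] = None
    body: str = ""


def passes_health_check(result: ProbeResult, settings: MonitorSettings) -> bool:
    # Question 1: did the server respond at all, and within the timeout?
    if not result.responded or result.elapsed_seconds > settings.timeout_seconds:
        return False
    # Question 2: did it return an expected status code...
    if result.status_code not in settings.expected_codes:
        return False
    # ...and, if configured, the expected text in the response body?
    if settings.expected_body is not None and settings.expected_body not in result.body:
        return False
    return True
```

A slow response, an unexpected status code, or a missing body string each fail the request on their own, which mirrors the "either question" rule described above.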

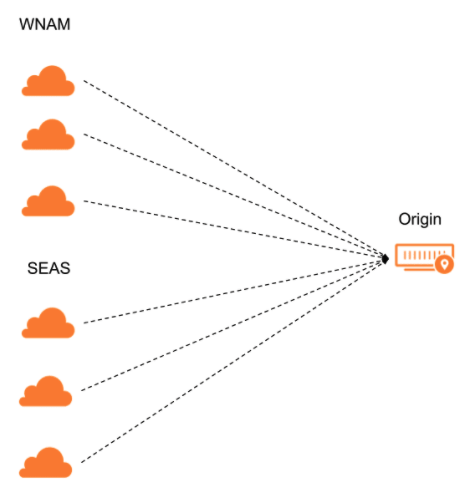

For each option selected in a pool’s Health Monitor Regions, Cloudflare sends health monitor requests from three separate data centers in that region.

If the majority of data centers for that region pass the health monitor requests, that region is considered healthy. If the majority of regions is healthy, then the origin itself will be considered healthy.
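The two-level majority vote can be sketched as follows. The region names are placeholders, and treating a tie as "not a majority" is an assumption of this sketch rather than documented behavior.

```python
from typing import Dict, List


def majority(values: List[bool]) -> bool:
    """Return True when strictly more than half of the values are True."""
    return sum(values) * 2 > len(values)


def origin_is_healthy(results_by_region: Dict[str, List[bool]]) -> bool:
    """Each region maps to the pass/fail results of its data centers."""
    # Level 1: a region is healthy if most of its data centers passed.
    region_health = [majority(checks) for checks in results_by_region.values()]
    # Level 2: the origin is healthy if most regions are healthy.
    return majority(region_health)


results = {
    "region-a": [True, True, False],   # 2 of 3 pass: region healthy
    "region-b": [False, False, True],  # 1 of 3 pass: region unhealthy
    "region-c": [True, True, True],    # 3 of 3 pass: region healthy
}
print(origin_is_healthy(results))  # True: 2 of 3 regions are healthy
```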

Load balancing analytics and logs will only show global health changes.

For greater accuracy and consistency when changing origin health status, you can also set the consecutive_up and consecutive_down parameters via the Create Monitor API endpoint. To change from healthy to unhealthy, an origin has to be marked unhealthy a consecutive number of times (specified by consecutive_down). The same applies in the other direction: to change from unhealthy to healthy, an origin has to be marked healthy a consecutive number of times (specified by consecutive_up).
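One way to picture the effect of consecutive_up and consecutive_down is a small state tracker that only changes status after enough contradicting results in a row. The `OriginHealthTracker` class is a hypothetical sketch, assuming failed checks count toward consecutive_down and passed checks toward consecutive_up; it is not the service's actual logic.

```python
class OriginHealthTracker:
    """Flip health status only after N consecutive contradicting results."""

    def __init__(self, consecutive_up: int = 2, consecutive_down: int = 2,
                 healthy: bool = True) -> None:
        self.consecutive_up = consecutive_up
        self.consecutive_down = consecutive_down
        self.healthy = healthy
        self._streak = 0  # consecutive results that contradict the current status

    def record(self, check_passed: bool) -> bool:
        """Record one aggregated check result and return the current status."""
        if check_passed == self.healthy:
            # The result agrees with the current status, so the streak resets.
            self._streak = 0
            return self.healthy
        self._streak += 1
        needed = self.consecutive_up if check_passed else self.consecutive_down
        if self._streak >= needed:
            self.healthy = check_passed
            self._streak = 0
        return self.healthy
```

With `consecutive_down=3`, two failures followed by a success leave the origin healthy, because the success resets the failure streak. Only three failures in a row mark it unhealthy.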

How a pool becomes unhealthy

When an individual origin becomes unhealthy, that may affect the health status of any associated origin pools (visible in the dashboard):

- Healthy: All origins are healthy.

- Degraded: At least one origin is unhealthy, but the pool is still considered healthy and could be receiving traffic.

- Critical: The pool has fallen below the number of available origins specified in its Health Threshold and will not receive traffic from your load balancer (unless other pools are also unhealthy and this pool is marked as the Fallback Pool).

- Health unknown: There are either no monitors attached to pool origins or the monitors have not yet determined origin health.

- No health: Reserved for your load balancer’s Fallback Pool.
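The pool statuses above can be approximated with a small classifier. This sketch assumes that `None` represents an origin whose health is not yet known and that a pool is "Health unknown" only when no origin has a known result. Both are simplifications, and the "No health" status is left out because it is reserved for the Fallback Pool.

```python
from enum import Enum
from typing import List, Optional


class PoolHealth(Enum):
    HEALTHY = "Healthy"
    DEGRADED = "Degraded"
    CRITICAL = "Critical"
    UNKNOWN = "Health unknown"


def pool_health(origin_health: List[Optional[bool]],
                health_threshold: int = 1) -> PoolHealth:
    """Classify a pool from per-origin health (True, False, or None if unknown)."""
    known = [h for h in origin_health if h is not None]
    if not known:
        # No monitors attached, or no results yet.
        return PoolHealth.UNKNOWN
    healthy_count = sum(known)
    if healthy_count < health_threshold:
        # Fewer available origins than the Health Threshold requires.
        return PoolHealth.CRITICAL
    if healthy_count == len(known):
        return PoolHealth.HEALTHY
    # Some origins are unhealthy, but the threshold is still met.
    return PoolHealth.DEGRADED
```

For example, with a Health Threshold of 2, a pool with origins `[True, False, True]` is Degraded, while `[True, False, False]` is Critical.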

Traffic distribution

When a pool reaches Critical health, your load balancer will begin diverting traffic according to its Traffic steering policy:

Off:

- If the active pool becomes unhealthy, traffic goes to the next pool in order.

- If an inactive pool becomes unhealthy, traffic continues to go to the active pool (but would skip over the unhealthy pool in the failover order).

All other methods: Traffic is distributed across all remaining pools according to the steering policy.

Fallback pools

A fallback pool is meant to be the pool of last resort, meaning that its health is not taken into account when directing traffic.

Fallback pools are important because traffic still might be coming to your load balancer even when all the pools are unreachable (disabled or unhealthy). Your load balancer needs somewhere to route this traffic, so it will send it to the fallback pool.
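Failover with steering set to Off, combined with the fallback pool, can be sketched as a walk through the pools in order. The `Pool` type and `select_pool` function are hypothetical, and the sketch covers only the Off policy, not the other steering methods.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Pool:
    name: str
    healthy: bool = True
    enabled: bool = True


def select_pool(pools_in_order: List[Pool], fallback: Pool) -> Pool:
    """Pick the first enabled, healthy pool in failover order."""
    for pool in pools_in_order:
        if pool.enabled and pool.healthy:
            return pool
    # Every pool is disabled or unhealthy: use the pool of last resort.
    # Its own health is deliberately not checked here.
    return fallback


pools = [Pool("primary", healthy=False), Pool("secondary"), Pool("tertiary")]
fallback = Pool("tertiary")
print(select_pool(pools, fallback).name)  # secondary
```

Because unhealthy pools are skipped on every selection, an inactive pool that becomes unhealthy does not disturb traffic to the active pool. It is simply passed over if a failover happens later.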

How a load balancer becomes unhealthy

When one or more pools become unhealthy, your load balancer might also show a different status in the dashboard:

- Healthy: All pools are healthy.

- Degraded: At least one pool is unhealthy, but traffic is not yet going to the Fallback Pool.

- Critical: All pools are unhealthy and traffic is going to the Fallback Pool.

If a load balancer reaches Critical health and the pool serving as your fallback pool is also disabled:

- If Cloudflare proxies your hostname, you will see an HTTP 530 response with error 1016 (Origin DNS failure).

- If Cloudflare does not proxy your hostname, you will see the SOA record.
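The load balancer statuses and the outcome at Critical health can be summarized in a short sketch. The function names are illustrative, and the returned strings simply restate the outcomes described above.

```python
from enum import Enum
from typing import List


class LoadBalancerHealth(Enum):
    HEALTHY = "Healthy"
    DEGRADED = "Degraded"
    CRITICAL = "Critical"


def load_balancer_health(pool_healthy: List[bool]) -> LoadBalancerHealth:
    """Classify a load balancer from the health of its pools."""
    if not pool_healthy:
        raise ValueError("a load balancer needs at least one pool")
    if all(pool_healthy):
        return LoadBalancerHealth.HEALTHY
    if any(pool_healthy):
        # At least one pool is unhealthy, but traffic can still be served.
        return LoadBalancerHealth.DEGRADED
    return LoadBalancerHealth.CRITICAL


def outcome_when_critical(fallback_enabled: bool, proxied: bool) -> str:
    """Describe what a client sees once every pool is unhealthy."""
    if fallback_enabled:
        return "traffic goes to the Fallback Pool"
    if proxied:
        return "530 HTTP/1016 Origin DNS failure"
    return "SOA record"
```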

[1] Origin pools: Groups that contain one or more origin servers.

[2] Monitors: Are attached to individual origin servers and issue health monitor requests at regular intervals.

[3] Health monitors: Requests issued by a monitor at regular intervals that, depending on the monitor settings, return a pass or fail value to make sure an origin is still able to receive traffic.